When we mention AI, most people think about autonomy and technologies that replicate human senses or actions, such as machine vision, voice recognition, and factory automation. But there is another area of AI that has equal, perhaps more significant potential.

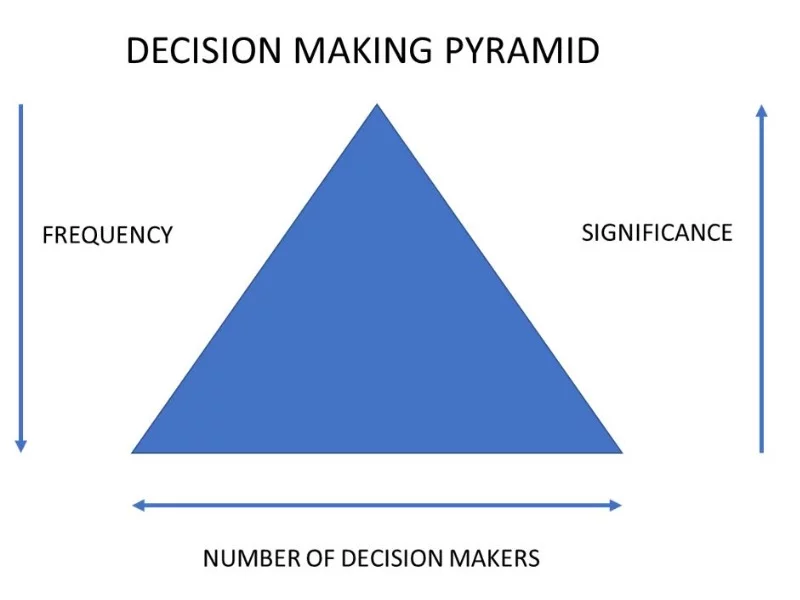

That area is replacing or augmenting the humans who make decisions. These people are often much better paid than manual workers, and the cumulative impact of their choices can make or break an organisation. I say cumulative because, essentially, there is a pyramid of decision-makers.

At the top, there is the CEO and board, who make infrequent but hugely significant decisions. As we go down the pyramid from CEO to managers to supervisors, the number of decision-makers increases and the frequency of decisions increases; at the same time, the significance of each decision decreases.

Some of these decisions are simple and repetitive, and others are complex, requiring a high degree of situational awareness, forecasts, experience, and judgment. Business rules assist with the former, providing consistency and the potential for automation.

Simple decisions that are taken by supervisors day-in, day-out, such as finding a replacement for absent staff, accepting or rejecting a workpiece, or deciding whether to work overtime, can be codified as they depend on only a few factors and choice is limited. Even then, they can be wrong, or not as good as they could be, but clarity and consistency are often more valuable.

But as we move up the pyramid, decisions become more complicated and significant, and this presents the opportunity for AI and similar technologies to provide substantial benefits, specifically:

- Making decisions that add the greatest value

- Making decisions faster, with little or no human effort and involvement

The sorts of decision that AI can significantly assist with have elements of complexity and uncertainty. These include decisions where there are many options, the solution must obey rules and regulations (internal and external) and meet requirements to deliver to customers, and each option has different financial implications.

This problem is classical optimisation and exists everywhere if we adopt the right frame of reference. AI is very good at solving these problems as it adopts a very accessible strategy: find a workable, legal solution almost immediately, and then refine it to reduce the costs (or increase profit, or revenue, or whatever).

What interests me here, however, is the sort of decision that involves uncertainty and requires an element of judgement, such as deciding on the size of a production run, or making an investment, or accepting a new customer.

These all have risk, good and bad outcomes, and financial impact.

For example, if we produce 1,000 cars, we may sell all of them. Maybe we could have made more, sold most of them, and made more money, but if we make too many more than we can sell, we start to lose money.
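To make the trade-off concrete, here is a minimal Python sketch that computes the expected profit of different production runs. The demand scenarios, their probabilities, the margin per car sold and the loss per unsold car are all made-up numbers, and `expected_profit` is a hypothetical helper written for illustration only.

```python
def expected_profit(run_size, demand_scenarios, unit_margin, unsold_loss):
    """Expected profit of a production run.

    demand_scenarios: list of (demand, probability) pairs (assumed forecast).
    unit_margin: profit earned on each car sold (assumed).
    unsold_loss: money lost on each car made but not sold (assumed).
    """
    total = 0.0
    for demand, probability in demand_scenarios:
        sold = min(run_size, demand)   # we cannot sell more than we make
        unsold = run_size - sold       # anything left over costs us money
        total += probability * (sold * unit_margin - unsold * unsold_loss)
    return total


# Hypothetical demand forecast: (number of buyers, probability).
scenarios = [(800, 0.2), (1000, 0.5), (1200, 0.3)]

profits = {
    run: expected_profit(run, scenarios, unit_margin=5000, unsold_loss=15000)
    for run in (800, 1000, 1200, 1400)
}
best_run = max(profits, key=profits.get)

for run, profit in profits.items():
    print(f"run of {run}: expected profit {profit:,.0f}")
print(f"best run size: {best_run}")
```

With these invented figures, expected profit peaks at a run of 1,000 cars and turns negative at 1,400. Different assumptions about demand or the cost of unsold stock would move the peak.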

In the same way, if we accept all customers, we may have significant payment problems, but as we reject more and more customers, we start to decline too many good ones and reduce revenue.
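The same shape shows up if we sketch customer acceptance in Python. The example below assumes each applicant comes with an estimated probability of default, and that we accept everyone at or below a cutoff. The probabilities, the revenue from a good customer, the loss from a bad one and the function `portfolio_value` are all assumptions for illustration.

```python
def portfolio_value(default_probabilities, cutoff, revenue_if_good, loss_if_bad):
    """Expected value of accepting every applicant at or below the cutoff.

    default_probabilities: assumed estimate of default risk per applicant.
    revenue_if_good, loss_if_bad: assumed financial impact of each outcome.
    """
    value = 0.0
    for p_default in default_probabilities:
        if p_default <= cutoff:
            # Expected upside minus expected downside for this customer.
            value += (1 - p_default) * revenue_if_good - p_default * loss_if_bad
    return value


# Hypothetical applicants, from very safe to very risky.
applicants = [0.01, 0.02, 0.05, 0.10, 0.20, 0.40]

accept_all = portfolio_value(applicants, 1.0, revenue_if_good=1000, loss_if_bad=5000)
moderate = portfolio_value(applicants, 0.10, revenue_if_good=1000, loss_if_bad=5000)
very_strict = portfolio_value(applicants, 0.02, revenue_if_good=1000, loss_if_bad=5000)

print(f"accept all: {accept_all:.0f}")
print(f"cutoff 0.10: {moderate:.0f}")
print(f"cutoff 0.02: {very_strict:.0f}")
```

In this toy portfolio the moderate cutoff beats both extremes: accepting everyone lets in loss-making customers, while the strict cutoff turns away customers who would have been profitable.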

All these decisions are about maximising the upside with acceptable downside risk.

AI has a wealth of algorithms that can automate or assist with such decisions. The algorithms capture experience in the form of machine learning.

Computers can harvest large amounts of data to create situational awareness, process it with an AI algorithm (such as a decision tree or neural network), and make decisions very quickly.

The advantages over the human decision-maker are the ability to take in all the relevant data and make the decision almost immediately in a repeatable way.

AI also avoids the tendency of humans to ignore facts, to decide prematurely (due to time pressure or other priorities), or to avoid risk entirely or too obsessively. The last problem can be very costly and virtually invisible to the organisation.

However, like many endeavours where technology meets reality, there are problems for the uninitiated. You don’t need to look very far for examples where things have gone wrong with decision automation.

Robodebt is an excellent example of an algorithm that very efficiently, and very rapidly, did a great deal of human, reputational and, ultimately, financial damage.

So, for the application of automatic decision-making or decision support, there are two issues that I would like to address:

- How to train AI algorithms

- Does AI replace or assist the human decision-maker?

The first point is not a deeply technical question around the selection of algorithm, or what data, or how much data, we need. Good data scientists are all over that.

The vital issue is this: do we train the algorithm to be like the human (and use decisions as the primary input), or do we train the algorithm on outcomes (and use these as the primary input)?

It seems evident that we would always use the latter, as that avoids human bias and other issues identified above. But that is, unfortunately, not very common.

The reasons are these: human decisions are clear cut, and we have complete information about them. Outcomes, by contrast, can take time to become apparent, they can be complicated, and we have no data on the results where we did not proceed.

For example, the human rejects a customer for a mortgage; would they have kept up with their payments, or not? We don’t know.

So humans only learn from one sort of mistake (that of deciding to proceed and then having a bad outcome), but we should try to do better with AI.

Also, a bad outcome may be the result of an unforeseeable event. This could be a financial crisis or pandemic, or it could be completely unexpected: we might expect a freelancer to lose their job, but not a government servant. We have to determine whether a bad outcome reflects a bad decision, or a good decision that went wrong for some other reason.

The point is this: it takes patience to wait for outcomes and effort to analyse them. And we also have the problem of training the algorithm.

We don’t have complete data, so we have to find a way to estimate the likely outcomes for rejected applications; otherwise, the algorithm may be biased. This issue is not limited to a decision around customer acceptance; the same is true with other decisions.

For example, if we reject a workpiece, we don’t know if a customer would have complained. Or if we decide not to open up at the weekend, we don’t know how much we would have taken.

So training AI to emulate humans is a lot easier than training it to achieve good outcomes. But that won’t give us the best result (unless, by sheer good luck, we have a human who is 100 per cent accurate). I believe that if we are to get the best algorithm and subsequent implementation, we have to use outcomes, regardless of the complexities identified above.

The second issue is around the deployment of AI within an organisation.

Do we seek to replace the human, or do we use AI to assist? For example, a potential deployment strategy is to use AI for triage purposes. If we have 100 decisions to make, maybe AI can deal with the ‘easy’ or ‘obvious’ ones and leave the human with only a fraction of the workload.

But ‘easy’ and ‘obvious’ are subjective and necessarily require human judgment, and that defeats the purpose. Even the simple triage strategy needs something better.

I landed upon these two questions when I completed research into business rule optimisation [1].

There are many situations where we can use rules or AI to make or assist decisions, such as project approval, investment appraisal, customer acceptance, and even in health and human services with triage, diagnosis and treatment options.

With enough data, AI can provide cost-effective decision-making or decision support.

The solution is to use joint probabilities. The critical probabilities are the probability that a human decides to proceed and is correct, and the probability that they decide to proceed and are wrong.

The first allows us to determine the potential upside, and the second is the associated downside; the potential profit is the first minus the second.

It isn’t just about accuracy; we can be very accurate by hardly ever proceeding, but that gives a very poor upside. It is the combination of the two that matters, and that is where the maximum value is to be had.

Given any historical data, we can estimate these probabilities using decisions and their associated outcomes. We can do the same for machine learning by applying the algorithm to historical data and evaluating its performance.

So we can estimate the net potential benefit of giving the decision to the human versus the AI system, and let the better one make that decision. Also, the human needs to get paid, so we include their cost in the calculation.
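As a rough sketch of that comparison, the Python below estimates the two joint probabilities from historical records and turns them into a net benefit per decision. The function name, the record layout, the gain, loss and cost figures, and the two sample histories are all assumptions made for illustration, not a description of any particular product or study.

```python
def net_benefit_per_decision(records, gain, loss, cost_per_decision=0.0):
    """Estimate the expected net benefit of one decision.

    records: list of (proceeded, good_outcome) pairs from historical data.
             good_outcome is None when the decision was not to proceed,
             because the outcome was never observed.
    gain, loss: assumed value of a good outcome and cost of a bad one.
    cost_per_decision: assumed cost of the decision-maker's time.
    """
    n = len(records)
    # Joint probability: decided to proceed AND the outcome was good.
    p_proceed_right = sum(1 for proceeded, good in records if proceeded and good) / n
    # Joint probability: decided to proceed AND the outcome was bad.
    p_proceed_wrong = sum(1 for proceeded, good in records if proceeded and not good) / n
    return p_proceed_right * gain - p_proceed_wrong * loss - cost_per_decision


# Made-up histories of 100 decisions each.
human_history = [(True, True)] * 60 + [(True, False)] * 10 + [(False, None)] * 30
ai_history = [(True, True)] * 68 + [(True, False)] * 10 + [(False, None)] * 22

human_value = net_benefit_per_decision(human_history, gain=1000, loss=3000, cost_per_decision=50)
ai_value = net_benefit_per_decision(ai_history, gain=1000, loss=3000, cost_per_decision=5)

better = "AI" if ai_value > human_value else "human"
print(f"human: {human_value:.0f}, AI: {ai_value:.0f}, better: {better}")
```

The sketch assumes the outcome is known, or has been estimated, for every case where the algorithm would have proceeded. As discussed earlier, that is the hard part when the human originally declined.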

My conclusion is straightforward. There is great potential for AI to help organisations make better decisions, faster and at a lower cost. But worry less about the technology and more about how it is applied. Think carefully about the data used to train the algorithms and how we deploy AI to replace or assist human decision-makers.

Alan Dormer is the chief executive officer of Australian decision-support software developer Opturion.
