Australia’s eSafety commissioner says technology giant Apple can expect government regulation if it continues to pause the rollout of controversial technology that would have scanned iCloud photo libraries for child sexual abuse material and shielded children from explicit content on their iPhones.

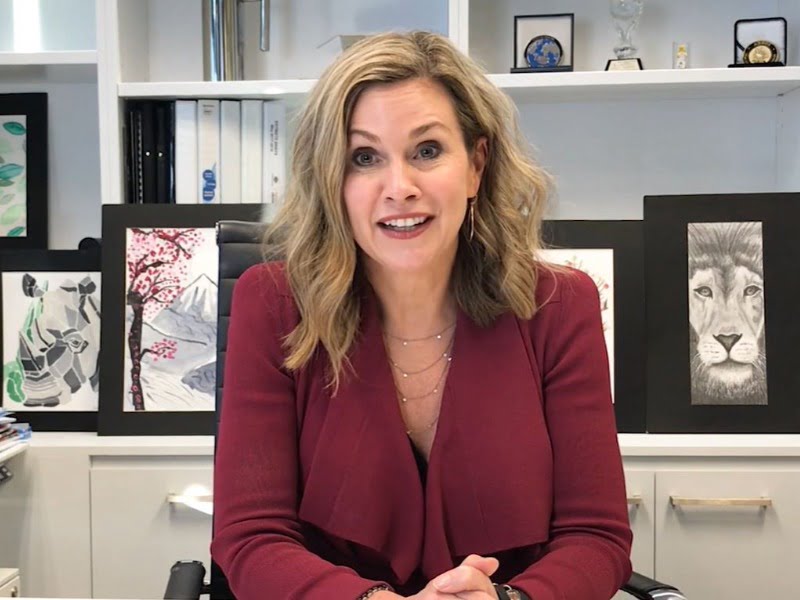

Julie Inman Grant, who commenced her five-year appointment as Australia’s eSafety commissioner in January 2017, said “Apple totally caved on doing the right thing”.

Ms Inman Grant, who has worked for various tech companies including Adobe, Twitter and Microsoft, was responding to a statement from Apple, released late on Friday night Australian time, that the company would pause its recently announced child-protection initiatives.

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material,” an Apple spokesperson told US tech publications. “Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

The technology was designed to identify photos uploaded to Apple’s online storage service, iCloud, that match known child abuse imagery. It uses a “hashing” algorithm to compare images in people’s accounts against the digital fingerprints of known abuse material, and alerts Apple reviewers once an account reaches 30 matching images.
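The matching-and-threshold logic described above can be illustrated with a minimal sketch. It is not Apple’s implementation: the function names are hypothetical, and SHA-256 stands in for the fingerprinting step purely to keep the example runnable. An exact cryptographic hash like this only matches byte-identical files, so a real system would need a fingerprint that tolerates resizing and recompression. The threshold of 30 is the figure cited in this article.

```python
import hashlib
from typing import Iterable, Set

# Threshold reported in the article; reviewers are alerted once it is reached.
MATCH_THRESHOLD = 30


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex digest for an image (SHA-256 is an illustrative stand-in)."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(uploads: Iterable[bytes], known_hashes: Set[str]) -> int:
    """Count uploads whose fingerprint appears in the set of known hashes."""
    return sum(1 for image in uploads if fingerprint(image) in known_hashes)


def should_alert_reviewers(
    uploads: Iterable[bytes],
    known_hashes: Set[str],
    threshold: int = MATCH_THRESHOLD,
) -> bool:
    """Flag an account for human review only once the match count hits the threshold."""
    return count_matches(uploads, known_hashes) >= threshold
```

In this sketch an account with 29 matches is not flagged and one with 30 is, which is the point of the threshold: a handful of chance matches should not trigger a review.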

A separate feature would have allowed parents to have naked or sexually explicit imagery blurred on a child’s phone.

Privacy advocates said they were worried the technology could be trained to search for other types of content stored on people’s phones if governments pressured Apple to do so.

“All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts,” the Electronic Frontier Foundation said when the technology was first announced in early August.

Responding to Apple’s pause, eSafety commissioner Inman Grant said on Twitter that “a chance at real industry leadership” had now “failed”.

“Having spent more than 22 years inside the tech industry, I observed that revenue, reputation & regulation tends to dictate these decisions. Regulation, here we come!”

She said it was “too important not to respond and expect better – from all participants in the technology ecosystem”.

“It’s our children who suffer as a result.”

Ms Inman Grant later pointed InnovationAus to Australia’s Online Safety Act, which takes effect on January 23 next year, as regulation Australia has in its arsenal.

Thorn, a US company that develops technology to defend children from sexual abuse and was co-founded by celebrities Ashton Kutcher and Demi Moore, said it was disappointed by the pause.

“Our expectation of Apple is that they publish a detailed timeline and clear deliverables to demonstrate how they will maintain their commitment to improve their child safety measures and implement scalable detection of child sexual abuse material in iCloud Photos,” it said in a statement.

“We can create solutions where the privacy rights of all people – adults and children – are balanced and respected. If this pause means Apple can deliver an even stronger solution, then we look forward to working with them to strengthen their efforts.

“Inaction is not an option. Every child whose sexual abuse continues to be enabled by Apple’s platforms deserves better.”

The US-based Electronic Frontier Foundation said it was “pleased” Apple was “now listening” to concerns, but reiterated its call for the technology to be abandoned.

“The features Apple announced a month ago, intending to help protect children, would create an infrastructure that is all too easy to redirect to greater surveillance and censorship,” said Electronic Frontier Foundation executive director Cindy Cohn.

“These features would create an enormous danger to iPhone users’ privacy and security, offering authoritarian governments a new mass surveillance system to spy on citizens.”
